-------------------------------------------

How to make a presentation? Effective presentation - Duration: 12:16. For more infomation >> How to make a presentation? Effective presentation - Duration: 12:16.

For more infomation >> How to make a presentation? Effective presentation - Duration: 12:16. -------------------------------------------

Trump Clears The White House In Staggering Move To Make Way For Special Guests He Wants To Pray With - Duration: 4:09. For more infomation >> Trump Clears The White House In Staggering Move To Make Way For Special Guests He Wants To Pray With - Duration: 4:09.

For more infomation >> Trump Clears The White House In Staggering Move To Make Way For Special Guests He Wants To Pray With - Duration: 4:09. -------------------------------------------

How to Make Dosa | Popular Indian street Food | BD daily - Duration: 2:02.

How to Make Dosa | Popular Indian street Food | BD daily

-------------------------------------------

Trump Clears The White House In Staggering Move To Make Way For Special Guests He Wants To Pray With - Duration: 4:12.

Trump Clears The White House In Staggering Move To Make Way For Special Guests He Wants

To Pray With Sutherland Springs, Texas is a community that

will forever be changed and the members of First Baptist Church while battered and bruised,

they are far from broken.

The White House has invited members of the Texas church where a two dozen people perished

last year to Washington for the National Day of Prayer.

The prayer service at the White House comes just two days before the six-month anniversary

of the church shooting that took the lives of 26 members of the First Baptist Church

of Sutherland Springs.

The surviving members of the heartbreaking incident were children with one just a few

short weeks from birth.

Another 20 members were injured.

Multiple members of the same family perished and are still very much healing and grieving.

The members who will be traveling to the nation's capital for the prayer service include Pastor

Frank Pomeroy and his wife, Sherri.

Pomeroy states both he and his wife will be speaking at the White House the day before

and then praying at Thursday's service.

Sherri describes the invitation as an honor.

The Pomeroys' own 14-year-old daughter Annabelle was among the 14-year-old victims on that

fateful November 5th day.

Pomeroy also spoke of the church's plans to hold a memorial on this coming Saturday

to honor victims and survivors.

A groundbreaking ceremony will immediately follow for the new Sutherland Springs church

building to be built next to the old church.

The old church will currently and forever sit as a memorial for those lost loved ones.

The church will be built of stone, symbolizing the strength and grit of a community that

has been forever changed.While it was hard to comprehend the incident, Pastor Pomeroy,

who lost his own daughter in the shooting, reminded his congregation to put their faith

in the Lord.

"You lean in to what you don't understand, you lean into the Lord," Frank Pomeroy told

reporters during a press conference.

"I don't understand but I know my God does."

Also speaking along with shooting survivor David Colbath, his son Morgan Colbath, and

Sherri Pomeroy's sister Sylvia Timmons will participate in a prayer service with the president

for the National Day of Prayer, the newspaper reports.According to the Dallas News –

"We did not expect a personal invitation to the White House from the President,"

Sherri Pomeroy told the Express-News via text message.

"When I received that email, I confirmed the legitimacy of the invitation and accepted,

of course!"

The five Sutherland Springs visitors will be attending the National Day of Prayer's

evening services at the Capitol's historic Statuary Hall.

The Pomeroys will be interviewed by Pastor Ronnie Floyd, president of the National Day

of Prayer Task Force, and Frank Pomeroy will lead a short prayer — all live-streamed

across the country.

They'll also attend a private banquet the night before hosted by the Task Force.We are

very honored that we are invited to the White House and asked to pray for our nation in

this historic place," Sherri Pomeroy said.

"While this invitation is bittersweet because of the events that brought us to this place,

may we never turn down a forum to share the gospel of Jesus Christ wherever it takes us."

The National Day of Prayer will be held in Washington DC's historic Statuary Hall,

and is an annual observance held on the first Thursday of May, inviting people of all faiths

to pray for the nation.

It was originally created in the year 1952 by a joint resolution of the United States

Congress.

It was signed into law by President Harry S. Truman.

-------------------------------------------

Autopilot Money Making System 2018 - How to Make Money Online - Duration: 3:41. For more infomation >> Autopilot Money Making System 2018 - How to Make Money Online - Duration: 3:41.

For more infomation >> Autopilot Money Making System 2018 - How to Make Money Online - Duration: 3:41. -------------------------------------------

4 Ways to make a man feel special - Duration: 1:19. For more infomation >> 4 Ways to make a man feel special - Duration: 1:19.

For more infomation >> 4 Ways to make a man feel special - Duration: 1:19. -------------------------------------------

Bees struggle to make it through tough spring - Duration: 1:16. For more infomation >> Bees struggle to make it through tough spring - Duration: 1:16.

For more infomation >> Bees struggle to make it through tough spring - Duration: 1:16. -------------------------------------------

How to Increase Retail Sales: Three ways to make your Facebook ads more effective - Duration: 3:52.

Hi Guys, it's Greg here from Rain Retail and in this video

we're going to show you how to increase retail sales with three quick tips to make your Facebook ads more effective.

According to Adespresso, "since 2015

worldwide social ad spent has increased by several billions of dollars, with Facebook ranking in the vast majority of that number."

Furthermore, as

indicated by LinkedIn,

"Marketers are continuing to increase their spending on Facebook ads as the platform becomes more and more effective." All this new advertising

translates to stiffer competition when it comes to attracting user attention. In general

it's said that you only have three seconds to get a users attention before they scroll past your ad in the news feed.

So here are three quick tips from Cox Media Group to get users to stop, read, and click your ad.

Number One:

Select photos that tell a story.

Since Facebook announced its preference for visual content, a change based on existing user behavior,

photo ads have increased in popularity. To stand out from the competition look for pictures that tell a story.

Nike excels at this by creating ads with images of athletes in mid action like the one here of LeBron James in midair

during a monstrous dunk. When users see these powerful images

they're more likely to stop and linger

because the photos stand out and help to establish a real connection with them. Another thing to consider is that there is a

particular group of cells in our brains that fire only when we see a face. According to Adespresso, "it is a phenomenon

that's deeply ingrained in our brains, a vestige of our primal beings-- so use it in your facebook ads!"

Number Two: Take advantage of video. Video is growing in popularity as an advertising medium because of its effectiveness.

According to Forrester, "One minute of video is equivalent to 1.8 million words of text in the message

communicated." In addition to conveying a lot of content in a short amount of time,

consumers tend to remember the videos they watch. According to the Online Publishers Association,

"80% of users recalled watching a video in the past month and

46% of them followed up the message with looking for more information or visiting the advertisers website."

Which means that video is clearly engaging consumers and

forging connections with the brands that are taking advantage of this popular advertising media. When creating videos for your audience

pay special attention to format,

storytelling, and

platform. Your brand or product should be included in the first few frames

and you shouldn't make people click to get the message or call to action. Lastly, make sure your video

demonstrates how your product or service solves the customers problem.

Number Three: Prevent ad fatigue.

Facebook ad fatigue occurs when your ads target audience is shown your ad too many times and

your click-through rate drops as your frequency rate, how many times

it's seen,

increases. To make sure your ads don't fall into the ad fatigue rut you need to refresh your ad

creative every month. Use Facebook Ads manager to monitor frequency actions and CTR so you know which ads are performing at the level

you'd like them to and which ones need to be tweaked or

Rotated out. And remember the most effective Facebook ads are those that are able to make a connection between a brand and its intended audience.

Using these three steps will fine-tune your Facebook ads and help you to keep your audience's focus right where you want it.

Guys thanks for watching this video. To see more tips and strategies aimed at helping you succeed in retail, just click the link below. And

if you found this video helpful, then please give us a big thumbs up and be sure to hit that subscribe button.

I'll see you next time.

-------------------------------------------

Underground Root Cellar Easy Plans-Unique Root Cellar-How To Build A Root Cellar In Your Basement - Duration: 2:44.

Have you ever thought about living without electricity, internet or mobiles?

We can guarantee that many people can never imagine this kind of scenarios.

However, there are chances that this type of conditions arises in your life due to flooding,

tornadoes, draught or even war.

How could you survive in this type of dangerous condition?

We believe you should stay prepared by learning the essential skills needed to deal with these

disasters.

Easy Cellar has a secret that assisted our grandfathers or forefathers to stay protected

against the economic crises, famines, and other similar conditions.

Easy Cellar is an incredible program that will prepare you to survive in dangerous conditions

with a minimum supply of the resources and help you protect your family.

The author of this guide is Tom Griffith has great experience and skills to deal with these

types of scenarios.

Author Tom has tested this program for making sure the readers can survive in the worst

conditions.

Moreover, your children can follow this program and find out the ways to make their own resources

without any need to search in dustbins.

Furthermore, it is boosted by a 60 day money back guarantee that make sure you will get

enough time for reading this program and know its authenticity.

Click the link in the description below this video for details.

After reading this guide, you will learn about the ways to preserve food and water all year

round to stay prepared for the worst conditions.

In short, we can say that the quality of your life will improve to a significant level.

The author has shown the drastic differences between the lifestyle of the modern man and

their ancestors.

As we all know, the people in the past lived their life in a practical way without the

technological facilities in the present world.

The main motive of Easy Cellar is to tell you the secrets that can make to easily withstand

emergency conditions and protect yourself and your family.

Easy Cellar can be easily defined as one of the best and comprehensive life-saving guides

available in the market.

It is based on the principle to help you stay prepared for dealing with all types of situations.

You will be able to discover the secrets to creating cellars in your backyard where you

can keep food and water.

They are created by using a different technique so that your resources stay unspoiled for

many months.

These bunkers can be built by using a minimal amount of material and effort.

There is also a step by step video guide included in this guide to create the Easy Cellar.

It also explained 15 natural remedies for radiation that will be beneficial in many

different manners.

Your body will get the essential nutrients needed for working properly in the emergency

conditions.

The guide will make sure that you are never short of eating and drinking resources even

in the worst scenarios like a flood, tornadoes, wars, and so on.

Click the link in the description below this video for details.

-------------------------------------------

Malloy set to make major jobs announcement - Duration: 0:14. For more infomation >> Malloy set to make major jobs announcement - Duration: 0:14.

For more infomation >> Malloy set to make major jobs announcement - Duration: 0:14. -------------------------------------------

How to make Paper Hat for Girls (Origami Hat) - Adorable Paper Crafts - Duration: 5:01.

Dear Paper Crafts Lover, Welcome to Paper Hat making instructions

You will need 2 pieces 20cm x 10cm paper

Please follow the instruction step by step for making a best origami hat

Make two unites same

Now you will need 0.7cm x 25cm paper and some decorative pearl.

-------------------------------------------

[WancoBeads] How to make Mini Pig - Duration: 25:35.

Beads abacus 4mm yellow 67 Beads abacus 4mm pink 4 Beads round 4mm black 2 Nylon string 0.23mm(dia.) 120cm 1

At first, Add 5 beads(pink, pink, pink, yellow, pink) to red nylon string

It crossed in the last addition beads

The number of beads that are in a ring is 5

Pick up 1 bead(pink) in red nylon string

Add 2 beads(yellow) to blue nylon string, It crossed in the last addition beads

The number of beads that are in a ring is 4

Add 1 beads(yellow) to blue nylon string, Add 3 beads(black, yellow, yellow) to red nylon string, It crossed in the last addition beads

The number of beads that are in a ring is 5

Add 2 to blue, cross

The number of beads that are in a ring is 3

Pick up 1 in blue

Add 3(yellow, black, yellow) to red, cross

ring (5)

Pick up 2(pink) in red

Add 1, cross

ring (4)

Add 3 to red, cross

ring (4)

Pick up 1 in red

Add 2 to blue, cross

ring (4)

Pick up 1 in blue

Add 2 to red, cross

ring (4)

Pick up 1(black) in red

Add 2 to blue, cross

ring (4)

Pick up 1 in blue

Add 3 to blue, put the left ear

Add 3 to red, cross

ring (5)

Pick up 1 in red

Add 3 to blue, cross

ring (5)

Pick up 1 in blue

Add 3 to blue, put the right ear

Add 3 to red, cross

ring (5)

Pick up 2(black, yellow) in red

Add 1, cross

ring (4)

Pick up 1 in blue

Add 3 to red, cross

ring (5)

Pick up 1 in red

Add 2 to blue, cross

ring (4)

Pick up 2 in blue

Add 2 to red, cross

ring (5)

Pick up 1 in red

Add 2 to red, cross

ring (4)

Pick up 1 in red

Add 3 to red, cross

ring (5)

Add 3 to blue, cross

ring (4)

Pick up 3 in blue

Add 1, cross

ring (5)

Pick up 1 in blue

Add 2 to red, cross

ring (4)

Add 3 to red, cross

ring (4)

Pick up 3 in red

Add 1, cross

ring (5)

Pick up 2 in blue

Add 1, cross

ring (4)

Pick up 1 in blue

Pick up 1 in red

Add 2 to blue, cross

ring (5)

Pick up 2 in blue

Add 1, cross

ring (4)

Pick up 4 in blue

Pick up 1 in red, cross

Pass the red nylon string to the position of the tail

Add 1 to red, put the tail

Pass the red nylon string to the position of the right hind leg

Add 1 to blue, put the left hind leg

Pass the blue nylon string to the position of the left fore leg

Add 1 to blue, put the left fore leg

Add 1 to red, put the right hind leg

Pass the red nylon string to the position of the right fore leg

Add 1 to red, put the right fore leg

Pass the red nylon string to the position of stomach

Tie the nylon string

After connecting, through the nylon string to 2 or 3 beads

Cut a little pull state the nylon string

It was completed

Use 3mm of acrylic beads

-------------------------------------------

Marble Cake Recipe - Duration: 13:01.

In this video I'm sharing with you how to make an outstanding marbled cake

using my homemade chocolate buttermilk cake and my vanilla or yellow cake

recipe. Yiu'll see one way to fix a lopsided cake and fill the layers with a

simple whipped cream with sliced peaches.

I'm going to show you today how I make a marble cake. First make sure that you

prepare your pans ahead. Now I do have a video on preparing cake pans, but I will

just tell you what I do, I put Crisco shortening around the entire pan, and you

can use a spray if you want, and then I put a little dusting of flour in there,

and I get all the excess out, and then I cut a piece of wax paper to fit the

bottom. And that way your cake is guaranteed not to stick. Now I made two

batters I made a yellow cake and I made a chocolate. Now the chocolate is on my

channel. It's my chocolate buttermilk cake. The yellow cake is not yet on my

channel. it's on my website weddingcakesforyou.com and you can also use my one

two three four cake which is also called vanilla cake, and it's also called my

wedding cake recipe. So the first thing you want to do is is fill your pans a

little bit with the yellow or the white cake whatever one you decide to use.

And you're going to do that for each cake.

And I do get a lot of questions about marble cake, so hopefully this will help.

Then I'm going to take the chocolate then I'm just going to put a little bit

in there.

And I'm gonna take a knife,you can use a spoon or a knife, I'll use a spoon. And

just run the spoon through and swirl the chocolate.

Don't overdo it though, because you don't want it to be too muddy. That's good.

This pan was a little bit less full so I'm going to use the rest of the white in

here.

So that's what it looks like, and I'm gonna pop it in the oven. In this case

I'm gonna do I used 325 most of the time with my oven

for my cakes and it's a convection oven. And bake those until they are somewhat

firm to the touch. So as you can see when I push down on it it bounces back a

little bit. And you can also use the toothpick method which is you put a

toothpick in the center and if it comes out clean that means your cake is done.

I'm going to add a little more chocolate to this one.

Okay here we go. I'm gonna pop them in the oven. Cakes are

done. Here's what they look like when they come out. Beautiful. Now if it sinks

a little bit like this one here is sunk in a little bit, it's fine. If it was

sunken deep then I would worry but it's fine. These are going to be delicious.

For the marble cake I'm using a whipped cream and a peach filling. I'm

using some peaches that I got at the grocery store in a jar and I'm gonna go

ahead and strain out the juice. The peaches are not in season right now so I

went with the nice,, I went with a really good-quality cling peach that's already

sliced and stored in syrup. I'm gonna set that aside. For your whipped cream

simply just put your whip on there. This is for a ten inch cake.

I'm going to go ahead and do the whole two cups it's gonna be probably more

than I need.

About a half, I have 1/3 cup of sugar here confectionery sugar. I'm gonna put

like half of that in there and a little bit of vanilla. I'm just gonna put a

couple of dollops in there and whip that up. I tasted it and it needs more

sugar so I'm putting the full 1/3. I just cut my peaches and what I did, sorry the

camera wasn't on and I thought it was. I just cut them in, here's what the

original thickness was, and I just cut them in thirds basically, lengthwise. and

then I just cut them in half. So here's the size and the thickness that I want

for these peaches. I cut this one out of a bigger board because

I didn't have any in the house. Got my cakes here. So the cakes

were frozen, there's they're not solid frozen they're just very very chilled.

They're a little bit frozen but not completely. They've been in there

overnight. I'm gonna leave them as a thicker layer and just do one thick

filling. And the sticky side is gonna go down. This is the sticky side that's on

top and it's now at the bottom. You can always go back in trim if you need to

trim the cardboard which I will probably do.

I'm just gonna get this one ready. Just gonna see how this might best fit. My

cakes came out a little uneven. So that looks pretty good right there. And what I do

a little trick, this is kind of a little tip, it's still not perfect. Let me see if

I can get that better. It's definitely lopsided. In a case like this I would

either trim the cake or I would add a little more butter cream on the side

that is off, which is what I'm gonna do, So just to mark it I'm just gonna put a

little line here with the knife.

Little notch. this is where I want it to be. Where I want to match it up. Okay so I

have my... You want to put buttercream into a pastry bag. I'm using a I think it's a

4B tip so any kind of a big tip you don't have to it doesn't have to be a 4B.

It could be just a round tip and you're just going to put a border all the way

around. Now I'm going to make it I'm going to come back around make it even

thicker on this side because I have a little bit of a lopsided cake here. And

then I'm going to fill. So you want to fill this area here with whipped cream.

Alright I'm gonna make this a little higher because I want to put more

whipped cream in there. And this dam will help keep the whipped cream in place.

So I've got my notch I'm going to line them up.

And look at that came out nice and straight.

So here's the finished cake. I'm working on creating a tutorial on how to do

these textured buttercream paintings on the side of a cake like this one and

this beautiful soft pastel version. Thank you so much for being here I really

appreciate it and if you haven't done so already subscribe to my channel and

click the little notification bell so you'll know when I upload all my videos.

And I also want to remind you I do have a book that's called Wedding Cakes with

Lorelie Step by Step and it has all my best recipes as well as how to make a

wedding cake. So thanks again for being here and I'll see you soon bye bye

-------------------------------------------

How To Make An Eye Dropper Water Purification Tips for Emergencies - Duration: 1:20.

how to make an eye dropper water purification tips for preppers emergencies how to purify water with bleach

hi it's AlaskaGranny you know how we're told a few drops of bleach added to

water can help purify it well what if you're in prepper an emergency situation and you

don't have an eyedropper how can you make one to purify water in emergencies with bleach it can be as simple as a ball

of cotton string and some kind of little lid cut off a piece of the string wind it

around into the lid and let a little piece of it hang over now fill your lid

with bleach and just be patient you can see if you will just be patient the bleach

will run down the string and drip one drop at a time right off the end of your

cord 2-4 drops of bleach per quart of water to purify wait 30 minutes

make sure when you're prepping for emergencies stocking your bug out bag emergency kit prepper stockpile along with your bleach have

an eyedropper or some cotton string or even jute twine to purify water one of the great ideas

about being a prepper is using the things you have to make the things that you need

learn more at alaskagranny.com please subscribe to the AlaskaGranny channel

-------------------------------------------

Тайны Коко-Как резать бумагу по-мексикански/Субтитры/Coco-How To Make Papel Picado - Duration: 2:20. For more infomation >> Тайны Коко-Как резать бумагу по-мексикански/Субтитры/Coco-How To Make Papel Picado - Duration: 2:20.

For more infomation >> Тайны Коко-Как резать бумагу по-мексикански/Субтитры/Coco-How To Make Papel Picado - Duration: 2:20. -------------------------------------------

District attorney said he never asked to make changes to police report - Duration: 1:30. For more infomation >> District attorney said he never asked to make changes to police report - Duration: 1:30.

For more infomation >> District attorney said he never asked to make changes to police report - Duration: 1:30. -------------------------------------------

Watch how to make the Pastel Flower Bead Necklace by Fusion Beads. - Duration: 7:02.

- [Cody] Hi, welcome to Fusion Beads.

Today, I'm gonna show you how to make

this cute little pastel flower necklace.

So, let's get started.

(upbeat music)

OK, this necklace features

five millimeter Swarovski flower crystal beads

in violet, aquamarine, peridot, jonquil, and light rose.

We have some size 11 silver plated metal seed beads.

Then we have this .8 millimeter silver beading chain.

I've got a 12 millimeter silver lobster claw clasp.

And then I have these little silver,

thin fold-over cord ends.

I've got six millimeter eight gauge silver closed jump ring.

And then I've got two five millimeter

19 gauge silver open jump rings.

For my tools today, I will just be using

two chain nose pliers.

You can find links to all these beads and supplies

in the description below.

So, let's get started.

OK, so to begin,

I go ahead and I take my chain,

and the length of chain that you will have

is already cut to 18 inches, so you don't need to cut it.

So to begin, I'm just gonna pick up one

of the silver seed beads and slide it on the chain.

So tiny, sometimes it's hard to get them started.

There we go.

I'm just gonna kind of bring it to the middle.

I haven't put my cord in on the other side,

so I wanna be careful not to pick it up,

so that it slides off.

Then I'm going to pick up one of the violet flowers,

and then I'm gonna pick up another seed bead.

'Cause I'm gonna use these little seed beads

as a little spacer between each of my flowers.

And then I'm gonna pick up the aquamarine bead

and just slide it on here.

And then another spacer.

And then the peridot.

And then another spacer.

Sometimes these little guys are really tough to get

because they're so tiny.

There we go.

And then jonquil.

Little flower bead.

And then one more spacer.

And then the last flower bead, which is the light rose.

And then you end with a spacer.

Alright, so now I've got them on there.

And it looks pretty cute and sweet.

So now I'm just ready to do my cord ends.

And to do those, they're just the little fold-over cord end.

And so, it's kinda hard to see.

But um, you'll put in your chain

and then you'll just fold the sides over

and it will secure your chain end there with no glue.

So I'm going to grab my chain.

And a little trick that I do sometimes

just because they are kind of hard to hold on to,

is that I'll slide my chain in there and then

I put just a little bit of it under.

Like above where the folds are at

and I grab it with my thumbnail there

to kind of hold on to it.

And then I'll take my chain nose pliers

and gently on one side only,

I just start to fold over.

Kind of start towards the back and move to the front there.

So you get one side folded down.

And then you go over to the other side

and then you fold it over.

OK.

Just wanna make sure it's really in there.

So I'm just gonna give it a good smash

from this top side here.

So now it's in there.

There is a little tiny bit that's like hanging out the side.

So I'm just gonna very carefully use my wire cutters

and trim that off, but do not cut off your loop.

There.

So I've got one side done.

Now, I'm gonna just repeat that process

on the other side here.

You don't have to have that little extra bit to hold on to,

it's just easier for me to do.

You don't have to do that, you can just put it in there

and then fold it over.

Again, in the same fashion just fold over one side.

And then, you fold over the other side.

There.

And this has just a little tiny bit of extra chain there.

So I'm just gonna trim it very, very carefully.

Not to cut the loop, just to cut the little bit

of chain that I had extra there.

Alright, so I've got my cord ends on.

Now I'm ready to add my jump rings here

and my lobster claw clasp.

So, I'm gonna go ahead.

I'm using my two chain nose.

I'm gonna open the jump ring

by pulling one side towards me and one side away from me.

Putting it on the cord end here.

And then attaching the lobster claw clasp.

And then, close it.

And then, just in the same fashion,

I'm gonna add the closed jump ring on the other end.

So string on the closed jump ring,

and then attach the jump ring to the other cord end.

And then, close it.

There.

You can find all the products and tools

for this inspiration design at FusionBeads.com.

(upbeat music)

-------------------------------------------

CCSU to make changes to sexual assault reporting - Duration: 2:42. For more infomation >> CCSU to make changes to sexual assault reporting - Duration: 2:42.

For more infomation >> CCSU to make changes to sexual assault reporting - Duration: 2:42. -------------------------------------------

Acquiring and Aggregating Information in Societal Contexts - Duration: 58:33.

>> I'm very happy to have Bo Waggoner here today.

He's currently a postdoc at Penn.

Before that, he got his PhD at Harvard.

He went to undergrad at Duke.

He's actually been interned at MSR in New England,

and interned twice at Google.

He is also a really serious runner.

He even won ACC award for

Athlete of the Year.

So, let's hear what he wants to talk to you about.

>> Yeah. Thanks very much. Thanks for

coming to the talk and putting this together,

especially thanks to Alex for making it happen.

Yeah, I'm really excited to get to talk to you guys.

So, the title of the talk is,

Acquiring and Aggregating

Information in Societal Context.

So, I want to think about a paradigm

that's very familiar to many of us,

especially in Machine Learning.

There's a bunch of information,

we feed it into our algorithms and they

output predictions or decisions.

So, it might be data about the weather,

and the output might be some classifier that

classifies weather as indicating sunny,

or rainy in the next day or whatever.

But while we traditionally like to

analyze our algorithms with this perspective,

actually, there's this recognition that

they live in a context that's bigger than this.

So, context of people.

In particular, the data that's coming

in is data that comes from people.

The data maybe about people in some way,

and the outputs, the decisions,

or predictions, also are affecting people.

So, this raises some really interesting questions.

I think both from a societal standpoint,

how should this be designed when we're

taking into account this context?

There are often really nice technical questions,

which I really like, coming

from more of a theory background.

So first, I just want to tell you briefly

about the kinds of questions that come up,

and then which ones I'll be

talking about today in more detail.

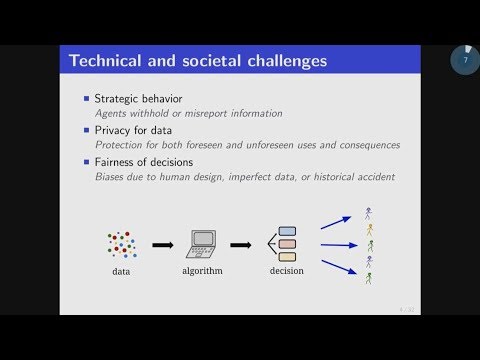

So, I like to

break the challenges down into three rough categories.

So, one is strategic behavior of agents.

So, when you take something like

machine learning type system,

and put it in the context with people,

they may be behaving strategically.

So, this input data you're getting,

for example, might not be the real or true data.

As one example of how they might behave strategically,

they could just misreport data.

They might do that because they care about

the outcomes, for example.

Another big concern is privacy of this data.

So, learning algorithms traditionally

weren't designed with any sort of privacy in mind,

but we often want to now redesign them,

or add this in because people care

about this data may be sensitive or private in some way.

This is a whole field of differential privacy,

and I'll talk a bit about that.

A final aspect, but one I won't get to today,

is this idea of fairness for decisions.

So, the decisions that

the algorithms make affect people in certain ways,

and the traditional approach of just trying to get

high accuracy may have ethical problems,

when they're put in that context of people.

So, it's a really interesting area.

There's this whole idea of fairness in Machine Learning,

but I won't get to that today.

So, instead I'll be talking about

projects from these first two categories.

Okay. So, the outline for the talk.

I'm going to tell you about a project dealing with

strategic behavior in Machine Learning.

So, how to do some learning in a situation where

agents are manipulating the data.

We'll spend more time on that,

and we'll spend a little time talking about

challenges that come up when we try to do

private Machine Learning and a project about sort of

translating theory

of private Machine Learning into practice,

and some challenges that arise,

then some stuff on future directions.

So, that's the outline. Please definitely stop me,

questions, discussion, would all be great.

I'd rather have some really interesting discussions here,

and have a little less time here,

if there are interesting questions to talk about.

Okay, good.

So, here's my overall picture of learning algorithms,

or algorithms in general in societal contexts.

For this first project, I want to focus

on the strategic behavior aspect.

So, this question of how do we do learning when

these people are being strategic about

the kind of data they give to the algorithms.

So, we're going to look at a particular setting,

and I want to motivate it with an application,

an example of detecting credit card fraud.

So, a high level example,

but imagine that over a series of rounds,

agents make a transaction,

and the system sees some data about this transaction,

and it wants to know if that's a fraudulent transaction,

or an honest one.

You can think of maybe

some feature vector is describing the transaction.

So, maybe it is a vector of real numbers or something.

So, the system is going to classify

it as being honest or fraudulent,

and has some classifier,

I'll be using beta for this.

Eventually, the system will learn what

the true status of that transaction is, let's say.

In fact, let's just

simplify things down and assume it learns very quickly,

like immediately, if it was an honest transaction,

or if it was fraud, because it gets

feedback from the account holder.

Then it wants to

update and learn better

classifiers based on this feedback.

So, what's the big challenge?

So, the challenges is that the fraudulent agents in

these systems are going to be

responding to the classifier that you pick.

So, that's why I put this hat on top of XT,

to denote that this future vector we're seeing,

or this transaction we're seeing,

may not be the truth that

these fraudulent agents would

like to have purchased, for example.

So, if there weren't some spam feature,

they just purchased whatever their favorite thing is.

But they're going to be- Instead of spam,

I should say fraud detection, right?

If there weren't fraud detection,

they just purchased whatever they want,

but they're going to be responding.

So, depending on what

the algorithms classifier looks like,

they're going to be buying things in such a way

to try to evade the classifier.

So, they're going to choose

these transactions strategically

depending on what classifier

you're deploying in the first place.

So, here's a picture of what that might look like.

So, if you picture each of

these transactions as a point in space,

we're modeling it as a point in R or d,

and you may have

all these blue points representing honest transactions,

and these red points,

I want you to think of these as the transactions

the fraudster would make if there were no consequences.

So, they'd like to buy, let's

say like chocolate ice cream,

or they'd like to buy a boat, or whatever,

but they're not going to

necessarily get away with that

because there's a fraud detection scheme.

In particular, if we had all this data,

we could deploy some classifier.

It might not be perfect,

but it would classify honest transactions from fraud,

and it would catch this fraud.

Yeah, there are obviously some mistakes.

So, I had a fun incident,

where my credit card was declined making a purchase,

and the location was the city I was living in,

and the purchase was running shoes.

So, I really don't know- if you know me,

that's like most of what I spend my income on.

So, I don't know what was-

These things aren't perfect in practice.

But life is even harder

because if the fraudsters

know that this is the classifier you're deploying,

and let's say in the worst case they

know or they have some good idea,

they're going to be responding

and modifying their transactions.

Okay. So, what you're going to see,

is going to end up looking more like that.

So, maybe instead of chocolate ice cream,

you have to buy strawberry,

maybe instead of a boat, you have to buy

a kayak, or whatever.

But you modify you transaction,

some way they try to increase

your chance of getting past this filter.

So, the filter has to be designed,

this detection classifier has

to be designed with these reactions in mind.

So, this will lead to some,

in game theory, the idea of the equilibrium.

But even knowing that fraudsters are best responding,

you want your classifier to be the optimal thing.

So, this is the perspective people have been taking.

So, there are a couple of papers on this problem.

Let me tell you a bit about the approach that they take.

So, at a high level they'll say,

suppose we have a bunch of data

drawn maybe IID from these populations,

some of it is honest, some of it is

fraud, it's all labeled.

Usually, these papers will suppose they

know the preferences of the fraudsters.

So, for example, one assumption might be

that there's a vector or direction,

and the fraudsters all want to

modify that direction or something like this.

So, using that information,

they can compute this classifier that does

well even given that they're best responding,

the fraudsters are best responding.

So, given that, learn some hypothesis,

some classifier, and it should do well.

So, the work I'd like to tell you about,

is going to try to weaken some of these assumptions.

So, one thing we want to think about

is an idea of agents arriving online,

and without having necessarily a distribution.

So, if you're familiar in Machine Learning,

often there are these two paradigms.

There's a IID data,

and then predicts well on that distribution.

But we instead want to think of

this worst case possibilities.

So, some transaction just arrives.

It's not necessarily from a distribution,

but in the long run we'd like to perform well.

So, after you sum notion of

regret, which I'll tell you about.

Another one is, we

really want to think about these agents,

especially the fraudulent agents.

It's heterogeneous and different.

So, they don't necessarily all want the same thing.

One might want ice cream,

one might want to sail across the river, or whatever.

A final important point is

that we didn't want to assume that

you can see the preferences of these fraudulent agents.

So, we don't necessarily know what they want.

We just see the transactions they tried to make.

So, we maybe saw them buying strawberry ice cream.

We'd like to try to infer from that,

did they actually want chocolate,

but they've modified it because of our classifier?

Or like do they actually like strawberry?

How can we use that to design better classifiers?

I hope that point makes sense.

Okay. So, that's the high level goal.

So, the paper is called strategic classification from

revealed preferences with some wonderful coauthors

at Penn or formerly of Penn.

Okay, and there are

three aspects to it that I'd like to discuss with you.

So,the first is how do we

model these aspects that I just talked about.

So, we have this idea of online arrivals and

limited feedback or limited knowledge about the agents.

And then what should we be shooting for?

And I think there's an interesting question there,

how do we measure how well we're doing?

And I'll explain why it's not super clear.

And then finally, how do we actually

try to solve the problem?

And for this talk with you today,

I actually want to,

I just want to spend a lot of time on

these because I think they're really interesting

and just a little bit of time on our solution

relative to maybe a normal outline.

So, it'll turn out that we can reduce

to known algorithms or

at least leverage them very heavily.

But we put a lot of

thinking and effort into this modeling questions and

I think that's also really

interesting for where to take future directions.

So, question so far?

I'll define the model next. Okay, awesome.

Okay, so in the fraud example I gave you before,

you already saw basically the model.

We'll make it a little bit more precise.

So, each agent that arrives,

they're either a fraudster or

an honest agent and they have

some what I'm saying true features,

which is the transaction they really want to make.

That's what we're thinking of it as.

Okay? And they have some true label.

Which is let's say one if they're

honest and minus one if they're not.

And finally, we assume they have some utility function,

which is measuring what's their utility

for facing this classifier beta or

this system described by beta when

their true purchase that they like to make is

x and they make a purchase x hat.

There are features that they present

to the classifier actually x hat,

so they've modified it in some way.

Okay.

So, what is this trying to capture?

Well, the utility intuitively has some tradeoff,

where if they're getting classified as

fraud or spam for certain,

then they're not going to get much utility.

But on the other hand, if they make

some transaction that they didn't

want in the first place and it goes through,

that's not all that useful to them either,

like strawberry ice cream, right?

So, apologies to anyone who actually likes,

I think I'm safe.

Okay. Good. So, we're

going to assume for this work that

honest agents don't act strategically in this way.

They just make the transaction they want to make.

So, they always said this x hat t.

This transaction actually ends up

happening just equal to the one they wanted.

They're not thinking about fraud detection schemes.

But we're assuming these fraudsters

do act strategically. Yes.

>> So are the fraudsters are being myopic or not [inaudible]

>> Yes a great question.

So, we assume they act myopically.

Yes.

So, we're going to assume that they

best respond with this utility function.

Yes.

>> Are we going to assume with their IIDs?

>> Yes.

So we're not going to assume they're IID for this.

Yes.

I mean I guess it would cover

that case and you could do some result for that.

Yes. Good yes. So we do assume

that the fraudsters will choose

this x hat t myopically

to maximize this utility function.

Yes.

Good. Okay. So we have this online model.

So there's a sequence of rounds.

The system has a current classifier any time,

the agent at that time

arrives and we assume the system observes okay

this transaction that they made or tried to

make and then it will learn this true label.

So for example if it was a fraudulent transaction,

even if they let it through,

the person who owned the credit card

will let them know that

it's being used wrong and they'll flag it as fraud.

Okay, so the system gets to learn

the true label for all the transactions.

Is that clear? It's okay.

Okay. And then we're going to use

some loss function to

measure how well the system performed on this data point.

Okay. So it's a loss as a function of

this classifier that you

were deploying or this hypothesis.

And then the transaction that was made and the label.

Okay. So this is just some function measuring performance

and natural one you might think of is that

this classifier is taking in X hat and just

saying one or minus one honest or fraud.

Okay. And then the loss could

be a loss of zero if it got it correct.

Right. So if it labelled it correctly according to

IT and a loss of one if it got it incorrect.

Okay so that's a natural loss function.

We won't end up working with it because

it's not computationally very

tractable but it's a good one

to keep in mind for the model.

And by the way so this model that I'm

presenting to you just as a heads up is very general.

We're going to end up solving some special case with

some strong assumptions but I think

it's fun to think about this general case so that in

future work people can try to solve more aspects of it.

Okay good. So the data points arrived,

the system had some loss,

the updates to a new classifier,

the next data point arrives.

Okay. Any questions about the model?

Yes.

>> [inaudible] with credit

cards [inaudible] particular person that

can be used in purchasing and the like [inaudible]

all the non- fraudulent?

>> Yes. That seems fair. One-

>> [inaudible].

>> Yes. So the formalism

that at this level I'm talking about can almost

even capture that because this xt

could include the cardholder's history,

somehow it can include their location or other things.

So it could probably include all that in this formalism.

Yes. In practice you might suppose you want to

just separate these problems like for each person.

>> It's like you give up the data points including like

features of the person

and what they're trying to purchase.

>> Yes, because we know

this credit card is associated

to this person or something.

So the other application we're thinking about as

motivation which you may have heard from

my verbiage is spam detection of emails.

So, honest people just

send the emails they want to send and

spammers would like to send

some particular spam email but it will get filtered out.

And so they try to modify it in some way and

maybe it's less effective but it gets

through the spam filter or something.

Yes. And again there you can think about

personalization versus everyone's data together.

>> [inaudible]

>> Yes, great question. Yes. So, does

the fraudster know the exact classifier that we're using?

So, in principle, there's no reason they would,

like if you're thinking about

credit card detection or spam filtering.

There's no real reason that we would publish,

for everyone to see, the exact algorithm

we're using at the current time.

But we're thinking of

that a little bit as security through

obscurity and that that's not

a good defense to assume

that they don't know the classifier.

You might be worried that,

if you think about like spam emails or something,

they send a ton of spam,

they can quickly start learning what

your classifier's doing at the current time.

So, this is a sort of worst

case assumption where they just

know the exact classifier and does respond to it.

So, we're thinking of it as

a worst case security assumption.

Question?

Okay, cool.

So now, I want to talk about measuring performance,

and why it's not obvious how to do that,

but how regret can be used.

So, the key ideas is that we're assuming that

these fraudulent agents are best responding, okay?

So, if they're honest, as I said,

they just make the transaction they want, but if not,

so let's define this function x hat t of

beta to be the best response.

So, the thing that the agent actually does,

the transaction they actually make, right?

So for the honest agent,

they just make the one they wanted,

but the dishonest agent is

doing some utility maximization.

So first, let's just define our performance.

So, this would be something pretty familiar and

normal in these machine learning settings.

So, we're going to take an average over all time,

capital t time steps,

of the loss that we experienced at that time step,

which is measuring how well did our classifier beta t do,

when the agent played x hat of

t of beta t. They responded to it,

and this was the true label.

Okay, so that's fine.

But normally, in this kind of model,

you look at regret as

compared to the best thing

you could have done in hindsight.

And now, there's a small wrinkle.

So, it's not really fair to

look at all the fraudulent transactions that were made,

and put them as dots like I did and then just say, "Oh,

If we had drawn this classifier,

we would have classified them all correctly."

So that's not fair because if

we had deployed this classifier,

they would have been modifying

their locations to respond to it.

So we can't just look at the past data and say,

something that did well in the past data,

would have actually done well if

deployed because if deployed,

the agents would have responded.

Okay. And so, this leads to this idea

that we want to compare to

the best fixed classifier in hindsight,

as we often do in these learning settings

but if we had used a different one,

they would have responded differently.

So, we need to compare to how it would have

performed had the agents responded to it.

What's the best thing in hindsight

knowing the agents would respond.

Okay. So, this was our performance

and then this is what OPT should look like.

The key point of this discussion is

that if we have deployed beta star the whole time,

we would have experienced loss of

beta star on the agent's responses to beta star.

So, this looks a little bit different

than the standard online learning where you might say,

"Take all of these x-y pairs and

plug in the performance of

beta star on those same x-y pairs.

This is taking into account that the

x's would actually look different.

The difference between these two performances

is just called the average Regret.

In the paper, we use this term, Stackelberg Regret.

The term comes from Stackelberg games in game theory

where a leader commits to

an action and the follower best responds.

So in this case, the follower is

best responding to the classifier.

So this notion of regret,

you can actually find in

previous papers in other literatures, though.

So things like security games and probably

if you're looking at online pricing

and auctions or something,

they would be using this kind of notion.

Although, we haven't seen it given a name

but we think Stackelberg Regret is a good name.

>> Is it still [inaudible] partial monitoring because I think the

enterprise literature on [inaudible].

>> Okay.

>> Like they have generalisation matrix.

>> Okay. Yes. So, it

is natural if you're thinking about this problem.

So, it's not surprising that people

have defined it in different places.

Good. But an interesting point about it is

that you can't- Yes, go ahead. Sorry.

>> So then the y's are also different, right?

>> No. So, that's important.

So, think of each agent that arrives, each x-y pair,

they're either a fraudster or they're

honest but they change.

If they're fraudster, they change their x.

>> They change their transaction.

>> They change their transaction x but their y is still,

were they honest were they not.

Yes, and one thing

that's interesting about our model

is that it's really baked in,

like their label is whether or not they're strategic.

So, that's an interesting aspect.

>> Do you need any assumptions on what fraction is fraud?

>> Good question.

Yes. So, we don't

need assumptions on what fraction are fraud versus not.

One thing we tried to do in

an extension is perform better

if there's a small amount of

fraud and you'll see briefly why.

So, a key point that's interesting

is if you just have the past data in front of you,

you can't really compute this OPT.

You have to know what these agent's utility functions

were in order to compute how they

would have responded if you had done something different.

You don't actually know that. You don't

know what their utility functions were,

you just got to see what they actually did on that day.

But we'll show how to compete with it,

how we can have an algorithm

whose performance competes with OPT,

or at least under some

assumptions under which you can do that.

I guess, I wouldn't be

surprised if everyone knows what average Regret is.

But just to be sure,

the point is that if average regret is going to zero,

then your loss per day is approaching that of OPT.

So that's good. So, you want

this average regret to go to zero.

If it goes to zero very fast, that's better.

By fast, I mean with t. So we're

often interested in average Regret as a function of time.

Okay. All good?

Okay. So now, let me tell you.

So, I tried to set up this relatively general question.

Let me tell you this specific setting

that we're able to solve for this paper.

So, we're assuming some linear predictor.

So, beta t is also

a vector in r to the d. The prediction,

you can think of as this number, beta.x.

Okay, so you can think of

positive means honest and negative means dishonest.

Very positive means I'm very

confident that this agent is honest.

Very negative, very confident they're dishonest.

So, we think about

logistic loss and we can also do hinge loss,

but we just focus on those two loss functions.

Okay. So this, I guess,

most people have seen this before but again,

this beta.x, this number,

if it's positive it thinks they're honest.

So if it matches y, if the sign of this is y,

then you have low loss.

If the sign doesn't match y,

so you predicted positive but they're actually fraud,

then you have a high loss, roughly.

But it's sort of a soft version

of zero one or it's trying to

be some soft version of zero one. All good.

Okay. So now, a very key assumption that we make,

what does the utility look like of these fraudsters?

What are they trying to do?

Okay, we assume that their utility of the form beta.x.

So, being classified as more positive is better for them.

So, the more honest

that they're perceived to be, the better.

But they pay some cost.

So notice this is beta.x-hat.

So, this is what the classifier predicts for

them but they suffer

some costs for having to modify from x to xn.

So, they have to trade off these two things.

We make some assumption on the class of

distance functions that are

measuring the cost for making this modification.

>> [inaudible]

>> Yes, good. So yes.

The reason is that we want to allow

heterogeneity among time steps.

So, an agent today

may care about different things than the agent tomorrow.

This is measuring that to say that,

like the agent today doesn't really mind switching

between chocolate and strawberry,

but the agent tomorrow minds very much.

So they have different distance functions.

>> What is the rationale of putting

this kind of disconnect when

you are putting it through the link,

one link for one agent but the other agent?

>> Do you mean,why one, the honest agent?

>> Looks linear.

>> Sorry so.

>> So, like the other loss

has this kind of hinge like form.

>> This one.

>> Right, whereas the process utility

is just fully of linear.

>> Yes. I see. I see.

So, maybe it wouldn't make

sense for their utility to be like the negative

of our loss or something like the worse we do the

happier they are or something.

>> [inaudible].

>>Yes, so it'll turn out that this is very

important for our whole approach, this particular form.

Yes, it would be

very interesting to be

able to solve something else like that.

I think one thing to mention though is,

one thing that keeps us really interesting is that it's

not a zero-sum game,

which your proposal would also not

be zero-sum because of this, I guess.

If it's a zero-sum game,

I think things are just less interesting because, yeah.

>> Is it realistic to assume this a utility function?

>> That's a great question, yes.

>> So one reason is to say like okay this

the best we can do technically.

>> Yeah.

>> Now does this merge anything.

>> Yeah.

Yeah, so we don't really know.

We don't have a particular scenario or like

we looked at these agents and they

definitely have this utility.

We can try to understand when this would be reasonable.

We don't think the answer is never.

So we think there are some scenarios.

So the most reasonable thing.

The first thing you would think of

probably could be like a 1 0.

So like my utility is one

if I got off my transaction went through.

So I was incorrectly classified and 0 if it didn't.

That would be maybe the most natural positive thing here.

But yeah go ahead.

>> [inaudible].

>> Well, yes.

So one thing we were thinking about is if

the classifiers using some randomisation

that's proportional to this.

So I'll allow things to go

through maybe with probability proportional

to the certainty of a classifier

then that this actually does make sense as

a utility could be.

But yeah, the main if you'd

go for something else from this

we don't know technically how to solve it.

So it would be great to do that.

>> I just wanted to mention that the 0 1,

I'm not necessarily sure that that's

the most natural thing because the thing where you

said before I guess it's unreasonable to assume

that attackers have a precise knowledge of data, right?

Here is also reflecting

that like the attackers want to make

sure be confident about

transactions will be classified as-

>> Yeah, thanks. That's something I forgot to

mention but is a great justification.

So yeah if you don't know

but you know that the higher this is the more

likely you are to be to pass through the filter then you

just try to maximise this that's some justification.

>> I guess it's not obvious to me how this

captures just like the inherent value of x or x half.

If x is like, I don't know,

like a 51-foot yacht and x half is a 52-foot yacht.

I don't really care.

Like they both have incredibly high value.

>> Yeah.

Yeah. So in this project

we're at a very high level of abstraction

and it's not clear what

the space are to

the deep space these things live in mean.

>> But is there a way, I mean

like some x hat are just going to have

intrinsically higher value or

some xs are just going to

have intrinsically higher value.

It seems like the only place that can

be captured in the prediction part.

>> Yeah. Yeah. So or maybe another,

I was going to say maybe you

could just have a coefficient in front that's

like how much I like

getting it through but you're right because I get

different utility for

different transactions going through.

Yeah. So yeah that may

that might not be captured by

this. That's really interesting.

>> Couldn't the distance

metric also include dollar amount?

>> Yeah. So yeah we can at least make these comparable.

Yeah. So you could sort of say,

yeah actually maybe this coefficient can

depend only on my true xt.

And then the distance is saying like you know

my distance from 51-foot yacht had

the value of 51-foot yacht minus

something number of feet.

Yeah. So yeah, and I'm glad we're having

the discussion because this is

a great direction for future work

can you what else can you

do besides these assumptions we're

making is really interesting.

Okay, so and our approach isn't going to work for

all Dt but for

some relatively large set of especially norms

of the of x minus x set and so this is

a pretty general almost completely general example

of the kinds of distance functions we can have.

So maybe have some matrix A and you take Ax and you

take Ax hat and you look at the distance

between them in some p norm

and you raise that to a power.

It's actually important for us that

this power be strictly greater than one.

The reason for that is actually intuitive.

There's this idea of a convexity involved.

And convexity is capturing diminishing returns.

So if I move this

a certain distance away and I

move that same distance again,

it's even more costly

and we actually need that to get results.

Otherwise you could have some,

this might not have a maximum.

You might be able to get

arbitrarily high utility by

moving farther and farther away arbitrarily.

Okay, good. So the main result

is under these assumptions

and in this setting that I told

you about we can reduce to

some online convex optimization algorithms

and use that to guarantee some regret.

So let me just really briefly tell you

that result and the idea of it.

So think of this loss at time

t as just a function of the classifier that

you chose to deploy at time t.

So that you get that

by plugging in beta in several spots here.

But think of it as one big function of beta.

Okay.

Well, if we can get that function to

be convex as a function of beta,

then we can apply things we know how to do.

Convex optimization.

Okay, and so that's what we show is true and

main tools using some convex analysis

which details which I

don't think are important for this talk,

so I'll skip it.

But so the corollary is

by applying various convex optimization algorithms,

you can get regret that's going to zero

as t increases some very fancy one you can

even get I think one over root t or one over t to

the two-thirds with better dependence on dimension.

Okay and this is despite I should emphases not

knowing exactly what this lost function

is that arrive right.

So we don't know this whole,

wo we have now a sequence of loss functions at each time.

They're each different.

And we don't know what

that entire loss function is

because it depends on their utility.

We just know that it's convex.

And there are convex optimization algorithms

that actually work in that setting.

>> [inaudible].

>> Yeah, I think so.

Yeah. Yeah. Good. Yeah, and so, sure.

Yes. So just quick extensions and future work.

Okay, so an extension

is you can treat these honest updates and

fraudulent updates differently and

do a better job with your algorithm.

The reason is that when an honest agent

arrives you actually do

know this convict's last function,

because it's just your last function.

If you change beta the thing

the data they gave you is not going to change.

So you can computer gradient

and you can do it fast a good update stuff.

When the fraud fraudulent update occurs,

you can't do that and you have to do something that

doesn't use gradient information.

If for those of you who are familiar

with such algorithms if not it's not a big deal.

Okay, so we have somewhat more general agent utilities

and also hinge lost.

The ideas are the same

but there's tons of room for future work.

I think it's really interesting as we've been discussing.

So other loss functions definitely.

Other forms of agents utility

definitely really interesting and especially if,

so you know our whole general approach

here once we formulated the problem ended up being

to reduce to this convex optimization which we

know how to do in this online setting.

So I mean one way to push on this is to just

say well here's another case

where we can use convex optimization.

Here's another case to go outside of that I really

don't know what approach you can

take that would be really interesting as well.

Okay. Yeah, go ahead.

>> So I guess one thing that makes me slightly nervous [inaudible]

optimization is that it

actually becomes really crucial that

when the attacker is in fact best responding to

the real knowledge of your data, right.

And so if they were working

with some sort of imperfect knowledge

about what I'm doing or

if I just like one very common thing

that goes on in these algorithms is you

have your current set of parameters and

because you can't observe a gradient

you randomly perturb it.

So there is no way in hell they

can know what random [inaudible] I actually applied.

>> Yeah.

Yeah, no, that's a great point.

Yeah. I think that's a disconnect between

our model and how you would deploy this in reality.

So maybe for the recording

I should say so the question was,

this is really relying on agents exactly best

responding to the exact beta we deploy.

And you're correct, we're using

these convex optimization algorithms that perturb

data a little and see how the gradient or see

what happens and use that to guess the gradient.

So if agents aren't best responding to the exact data

including this perturbation then

it's not clear this is going to work.

Yeah, I think another,

maybe there can be some way to get

around that by if you make

some assumptions about agent utilities,

you could guess that maybe

the gradient like locally you can guess

that they won't change too much and

then just use the gradient of your own loss.

So yeah, it should be addressable I would hope,

but not in this paper.

It's a great question.

>> Anything else, yeah.

>> I was just trying to think as at

a current price or whatever my utility would be.

It seems like it would be like a lot related to

the dollar amount of the item or

the dollar amount you could get from selling it.

So probably some things are easier to sell than others.

>> Yeah.

>> So, maybe if you could run like, if it all comes out,

suppose it all comes down to one number like

the dollar amount and you can sell something for,

will that simplify it what you do with it?

>> Yeah. Maybe, yeah. I think this is really

similar to the previous question about,

could we sort of this utility

doesn't seem to directly depend on something

like dollar value amount but could we

somehow argue that that's

a special case where there is

a coefficient which is the dollar amount of x.

>> [inaudible] second terms, you could just put them in dollars.

>> Yeah. So it seems to me possible.

Well, okay.

So we wanted A to be invertible but if you think of maybe

mapping these purchases into dollar amounts, okay?

If it's mapping down to

one dimension maybe it's there's a problem with

our formalism but it seems possible.

But yeah, when I don't want to claim this till

he is capturing too much because it's

a pretty special form so I'm not sure.

Good. Okay great. So yeah.

So, while I have you I'd like to give you

a different example of this machine

learning in societal context which is going to be

privacy focused example and much briefer of course.

Yeah. So, strategic behavior

was one really interesting aspect of this idea.

Another one that I'm interested in is this idea

of, maybe the agents,

they don't have a particular concern

about what this classifier is

doing so it's not like you know it's

classifying their own transactions.

But there is this privacy concern that it's going to

reveal information about my data

and differential privacy is a field which turns out to

be some very nice theory

and also moving into practice for addressing this issue.

So, I think I can probably guess that

everyone has seen differential privacy

a few times or many times.

So, I hope you don't mind, I think I'll

skip the definition.

It's actually not that important

for the level I want to tell you about this project.

The high level idea is you take your algorithm should be

randomized and by adding noise or being randomized,

reveals very little about the data

because a change in one person's data,

doesn't make much difference in the output you

see in terms of probabilities of different up.

That's a high level if you know that,

then you know enough to follow.

So, let me just skip this thing. It's fine.

Okay.

So, what do we want to address

in this project I'm going to tell you about briefly.

So, differential privacy, it's pretty well

understood and practical I would argue for

answering statistical queries about a data set.

So, what is the meaning of this data set?

Or something like that or different queries

about some complex data

but even being used in the census which is pretty cool.

And the most basic approach is that, okay.

So there's this parameter Epsilon differential privacy,

if you add noise that has

standard deviation like one over Epsilon,

then you're preserving Epsilon

differential privacy intuitively.

So, Epsilon very small,

is very private but lots of noise.

So, we understand all that very well.

Machine learning queries and data is very interesting.

So, there's more recent work.

It's quite active but I would argue it's

not well understood at least not in

the way that statistical queries are.

Because the kind of theory bounds we

get might be quite loose because we're

using these complicated algorithms, right?

So this algorithm is,

take the true number, add some noise, okay?

And then we can analyze how accurate that is.

If you're talking about something like

a stochastic gradient descent with

extra noise added to preserve privacy,

understanding how private and accurate that really is,

it's going to be very difficult

and often these papers are written by theorists.

So, who are great and I love theorists, okay?

But their theorems tend to look like,

I should say, our theorems tend to look like this.

They tend to look like the following.

Let's say like you prove

some general theorem that says you run

our algorithm with privacy level Epsilon and I'll

guarantee you an accuracy Alpha with high probability.

And often, you might

prove something with a consonant that looks like this,

more often you prove a

constant that looks like this, right?

So why is this an issue?

I think it's an important idea.

So people do this in algorithms.

But you put the algorithm in

practice and it runs really well,

and it doesn't matter you know the fact that you couldn't

prove a low constant is not a big deal.

But here, I want to argue it is an issue.

So, let's say you're an engineer at

some data corporation and

you want to run a differentially private,

you want to run this algorithm.

Well, you have to choose the privacy level you're

guaranteeing the people, okay?

And the problem is that theorem

isn't really going to help you that much in

figuring out how you actually choose it.

If you want an Alpha.01,

should you set Epsilon to be 100,000?

That will guarantee you an Alpha.01

but there's no privacy at all basically.

We want Epsilon to be around one or less.

On the other hand, you could probably

could get away with setting up Epsilon to one

because probably there's all these

loose constants in there but

you don't know and it can be hard.

And what if accuracy is critical to

your system so you can't afford to take chances?

Okay. So this project and this is a paper it

nips last year with some wonderful co-authors and Steven.

I'm sorry, and that's the most wonderful of them all.

So the question is,

if we have an accuracy requirement,

can we run a learning algorithm that tries to find

the most private possible output that we can

have without having to

tell it what Epsilon and Alpha to shoot for,

or sorry what Epsilon to shoot for,

it will try to satisfy

this accuracy requirement but be really private, okay?

And so, the setting

is what we call empirical risk minimization.

So, you just have this data set and you

have a last function and you

just try to find an hypothesis

that minimizes loss on the data set, right?

Okay.

So, I'm just going to give you